Most modern artificial intelligence is built on machine learning, which usually needs large amounts of data to perform well. In many contexts, however, and particularly in Africa, where local datasets are often limited, knowing how to train AI with little data is essential. Fortunately, several effective strategies address this challenge.

1. Leverage data augmentation techniques

Data augmentation involves artificially generating new data from an existing dataset. This is particularly useful for images and text. For example:

- Images: Images can be rotated, flipped, or have their brightness or colors adjusted to enrich the training set.

- Text: Techniques such as paraphrasing, back-translation (translating a sentence into another language and back), and random word deletion can generate more textual data.

By applying these methods, a small dataset can be expanded several times over, which usually helps the trained model generalize better.
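The image transformations described above can be sketched in a few lines. This is a minimal illustration, assuming an image is represented as a grid of grayscale pixel values between 0 and 255; the function names are hypothetical, and a real project would normally rely on an image library rather than plain lists.

```python
# Illustrative sketch: an "image" is a grid (list of rows) of 0-255 grayscale values.

def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def adjust_brightness(image, delta):
    """Add delta to every pixel, clamping to the valid 0-255 range."""
    return [[max(0, min(255, pixel + delta)) for pixel in row] for row in image]

def augment(image):
    """Return the original image plus four augmented variants."""
    return [
        image,
        flip_horizontal(image),
        rotate_90(image),
        adjust_brightness(image, 40),
        adjust_brightness(image, -40),
    ]

sample = [[10, 200], [120, 250]]
variants = augment(sample)
print(len(variants))  # one original image becomes 5 training images
```

Each variant keeps the label of the original image, so the labeling effort does not grow with the dataset.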

2. Use pre-trained models

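Instead of training an AI from scratch, we can start from pre-trained models (such as GPT, BERT, or ResNet) released by large technology companies and research labs. These models have already been trained on very large datasets, often millions of examples or more, and can be adapted to specific needs using a technique called fine-tuning: training continues on a small task-specific dataset, so the model's existing knowledge is adjusted rather than relearned.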

For example, a Senegalese company wishing to develop a chatbot in Wolof could adapt an existing language processing model by providing it with a few hundred examples specific to the local context.
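To make the idea of fine-tuning concrete, here is a deliberately tiny sketch. It assumes a one-variable linear model in place of a real network, and the "pre-trained" weights are hypothetical values standing in for what a large source task would have produced. It only illustrates the principle: with the same few training steps and the same four examples, starting from pre-trained weights ends closer to the target than starting from zero.

```python
def mse(w, b, data):
    """Mean squared error of the model y = w * x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(w, b, data, steps=5, lr=0.05):
    """Run a few steps of gradient descent from the given starting weights."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical weights standing in for a model pre-trained on a large source task.
PRETRAINED_W, PRETRAINED_B = 2.0, 0.0

# Small target dataset (4 examples) from a related but slightly different task.
target_data = [(0.0, 0.5), (1.0, 2.7), (2.0, 4.9), (3.0, 7.1)]

fine_tuned = train(PRETRAINED_W, PRETRAINED_B, target_data)  # fine-tuning
from_scratch = train(0.0, 0.0, target_data)                  # no prior knowledge

loss_fine_tuned = mse(*fine_tuned, target_data)
loss_from_scratch = mse(*from_scratch, target_data)
print(loss_fine_tuned < loss_from_scratch)
```

In practice the same pattern applies to a language or vision model: load the published weights, then continue training for a short time on the local examples, usually with a small learning rate.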

3. Apply transfer learning

Transfer learning involves reusing part of an existing model, typically its early layers, for a new task. This approach is particularly effective when data is scarce because the reused layers already encode general knowledge, so only a small new part of the model has to be learned from the new data.

For example, an AI model trained to recognize objects in an urban environment can be adapted to identify features in a rural environment by adding a small set of new, specific images.
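The mechanics of reusing part of a model can be sketched as follows. This is a toy under stated assumptions: `frozen_base` is a hypothetical stand-in for the early layers of a pre-trained network and is never updated, while a small new output layer (a logistic regression head) is trained on only four labeled examples.

```python
import math

def frozen_base(point):
    """Stand-in for pre-trained layers. Nothing in here is updated during training."""
    x1, x2 = point
    return [x1, x2, x1 * x2]

def predict_proba(head_weights, head_bias, point):
    """Probability of class 1: frozen features followed by the trainable head."""
    features = frozen_base(point)
    score = sum(w * f for w, f in zip(head_weights, features)) + head_bias
    return 1.0 / (1.0 + math.exp(-score))

def train_head(examples, epochs=100, lr=0.5):
    """Train only the new output layer on top of the frozen features."""
    weights, bias = [0.0, 0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0, 0.0], 0.0
        for point, label in examples:
            error = predict_proba(weights, bias, point) - label
            for i, feature in enumerate(frozen_base(point)):
                grad_w[i] += error * feature
            grad_b += error
        weights = [w - lr * g / len(examples) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(examples)
    return weights, bias

# New task with very little data: four labeled points.
new_task = [((1, 1), 1), ((1, -1), 0), ((-1, 1), 0), ((-1, -1), 1)]

weights, bias = train_head(new_task)
predictions = [round(predict_proba(weights, bias, point)) for point, _ in new_task]
print(predictions)
```

The raw inputs of this toy task cannot be separated by a straight line, but the features produced by the frozen part make the problem easy for a very small trainable layer. That is the reason transfer learning works with few examples.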

4. Rely on synthetic data generation

When real data is insufficient, synthetic data can be generated using simulation, rule-based generators, or generative AI models. For example:

- In computer vision, simulation software can be used to create artificial images.

- In language processing, large language models such as ChatGPT can generate realistic dialogues to train a chatbot model.
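Synthetic text does not always require a generative model. As a minimal sketch of the rule-based route, the code below expands a handful of templates into labeled chatbot training examples. The intents, templates, and slot values are invented for illustration and are not taken from any real dataset.

```python
from itertools import product
from string import Formatter

# Hypothetical templates and slot values, invented for illustration only.
TEMPLATES = {
    "check_balance": [
        "What is the balance of my {account} account?",
        "Show me my {account} balance.",
    ],
    "send_money": [
        "Send {amount} to {contact}.",
        "I want to transfer {amount} to {contact}.",
    ],
}
SLOTS = {
    "account": ["savings", "mobile money"],
    "amount": ["5000 francs", "20000 francs"],
    "contact": ["my brother", "the supplier"],
}

def slot_names(template):
    """Return the placeholder names used in a template, in order."""
    return [name for _, name, _, _ in Formatter().parse(template) if name]

def generate_examples(templates, slots):
    """Fill every template with every combination of its slot values."""
    examples = []
    for intent, intent_templates in templates.items():
        for template in intent_templates:
            names = slot_names(template)
            for values in product(*(slots[name] for name in names)):
                text = template.format(**dict(zip(names, values)))
                examples.append({"text": text, "intent": intent})
    return examples

synthetic = generate_examples(TEMPLATES, SLOTS)
print(len(synthetic))  # 12 labeled examples from 4 templates
```

Generated examples are best reviewed by someone who knows the target language and context before they are used for training, since templates can produce unnatural sentences.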

5. Favor few-shot learning and zero-shot learning

Some large pre-trained models, such as GPT-4, can handle a new task from only a few examples supplied in the prompt (few-shot learning) or from an instruction alone, with no examples (zero-shot learning). This allows AI to be used without building a large training dataset.

An African entrepreneur developing a voice assistant in a local language can test this approach by providing a few well-chosen examples instead of building a large dataset.
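In practice, few-shot learning with a large language model often comes down to how the prompt is assembled. The sketch below builds such a prompt as plain text; the function name, the `Input:`/`Output:` layout, and the sample utterances are assumptions made for illustration, and the call to an actual model is deliberately left out.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, a few worked examples, and the new input.

    Passing an empty list of examples yields a zero-shot prompt.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

# Placeholder examples in English; in a real project these would be
# well-chosen utterances in the target local language.
examples = [
    ("Turn on the radio", "play_media"),
    ("What time is it?", "ask_time"),
    ("Call my mother", "make_call"),
]

few_shot_prompt = build_few_shot_prompt(
    "Classify the user's request into an intent.",
    examples,
    "Play some music",
)
zero_shot_prompt = build_few_shot_prompt(
    "Classify the user's request into an intent.",
    [],
    "Play some music",
)
print(few_shot_prompt)
```

The resulting string is what would be sent to the model. Comparing the answers obtained with the few-shot and zero-shot prompts is a quick way to see how much the examples help.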

6. Leverage active learning

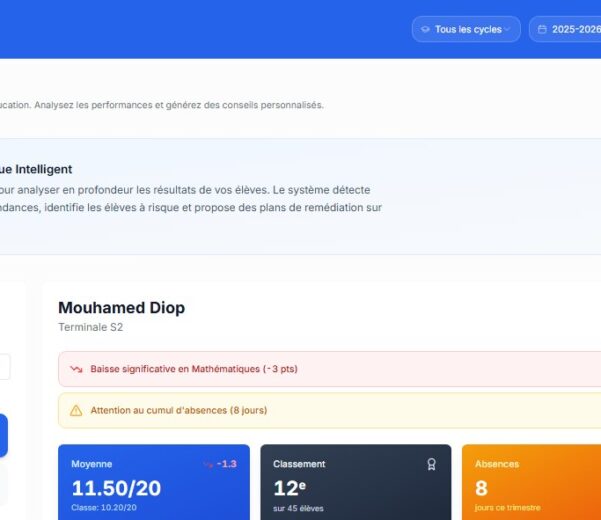

Active learning involves asking a human to label only the most useful data. Rather than annotating thousands of examples up front, the model flags the examples it is least certain about, and an expert labels just those to refine the learning. This significantly reduces the amount of labeled data required.

For example, a Senegalese startup developing an AI for medicinal plant recognition can start with a small database and refine its model with the help of botanical experts.
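One common way to decide which examples the model "doubts" most is uncertainty sampling. The sketch below is a minimal version of that idea, assuming the model already outputs class probabilities for each unlabeled item; the photo names and probability values are made up for illustration.

```python
import math

def entropy(probabilities):
    """Shannon entropy of a predicted distribution: higher means less certain."""
    return -sum(p * math.log(p) for p in probabilities if p > 0)

def select_for_labeling(predictions, budget):
    """Pick the `budget` unlabeled items whose predictions are most uncertain."""
    ranked = sorted(predictions, key=lambda item: entropy(predictions[item]), reverse=True)
    return ranked[:budget]

# Hypothetical model outputs for four unlabeled plant photos (three classes).
pool_predictions = {
    "photo_a": [0.98, 0.01, 0.01],  # confident: little to gain from labeling
    "photo_b": [0.34, 0.33, 0.33],  # almost a coin toss between all classes
    "photo_c": [0.60, 0.30, 0.10],
    "photo_d": [0.50, 0.50, 0.00],
}

to_label = select_for_labeling(pool_predictions, budget=2)
print(to_label)  # ['photo_b', 'photo_c']
```

The selected items go to the expert, their labels are added to the training set, the model is retrained, and the cycle repeats until the labeling budget is spent or accuracy is sufficient.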

Training an AI with little data is entirely possible. With techniques such as data augmentation, pre-trained models, transfer learning, synthetic data, few-shot learning, and active learning, African companies can build effective AI solutions even with limited resources.

Jangaan Tech supports companies in adopting AI and intelligent automation. If you want to integrate AI into your project without owning huge databases, contact us.